Last year I curated a series of videos for a partner project to introduce Subsquid and explain its core concepts, from the basics up to some lesser-known features. With the new year, I decided to rewrite parts of the videos, adapt them to the latest changes in the SDK, re-record them, and create a new series that we decided to call Subsquid Academy.

Introduction

In this lesson, we are going to discuss a few features that have not been shown in previous lessons, either because they did not fit the project or because, despite being relevant, they deserve a separate explanation.

We are going to start with a feature that allows developers to read the state of a smart contract by accessing the blockchain in a type-safe way.

Accessing contract state

Let’s open our IDE on the ENS project from our lesson on indexing an EVM smart contract:

github.com/RaekwonIII/ethereum-name-service..

The section where we create an instance of the Contract model uses hardcoded data, as I mentioned in the lesson where we developed this project. Today we are going to find out how to source this data directly from the blockchain.

For that, we need a different contract that stores this information on-chain. For example, we can look at the list of top NFTs on Etherscan.

We can pick Bored Ape Yacht Club, because it’s quite famous and pretty much everyone knows about it. We need to copy the contract address from the collection page and paste it into our code:

```typescript
const contractAddress = "0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D".toLowerCase();
```

Then we need to generate TypeScript code from the contract ABI. We could obtain the contract’s ABI from the "Contract" tab on the collection’s page on Etherscan, and create a new JSON file named bayc.json in the project, just like we did in the last lesson.

But this is a good opportunity to show you another feature of Subsquid’s command-line tool. If you know the smart contract’s address and it’s deployed on Ethereum, you don’t need to download or copy-paste the ABI: you can simply provide the tool with the contract’s address:

```bash
npx squid-evm-typegen src/abi 0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D
```

We now have our contract’s events and functions, plus a Contract class to interact with it on-chain. The class has the name, symbol, and totalSupply functions, which we are going to use in a moment:

```typescript
export class Contract extends ContractBase {

    BAYC_PROVENANCE(): Promise<string> {
        return this.eth_call(functions.BAYC_PROVENANCE, [])
    }

    MAX_APES(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.MAX_APES, [])
    }

    REVEAL_TIMESTAMP(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.REVEAL_TIMESTAMP, [])
    }

    apePrice(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.apePrice, [])
    }

    balanceOf(owner: string): Promise<ethers.BigNumber> {
        return this.eth_call(functions.balanceOf, [owner])
    }

    baseURI(): Promise<string> {
        return this.eth_call(functions.baseURI, [])
    }

    getApproved(tokenId: ethers.BigNumber): Promise<string> {
        return this.eth_call(functions.getApproved, [tokenId])
    }

    isApprovedForAll(owner: string, operator: string): Promise<boolean> {
        return this.eth_call(functions.isApprovedForAll, [owner, operator])
    }

    maxApePurchase(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.maxApePurchase, [])
    }

    name(): Promise<string> {
        return this.eth_call(functions.name, [])
    }

    owner(): Promise<string> {
        return this.eth_call(functions.owner, [])
    }

    ownerOf(tokenId: ethers.BigNumber): Promise<string> {
        return this.eth_call(functions.ownerOf, [tokenId])
    }

    saleIsActive(): Promise<boolean> {
        return this.eth_call(functions.saleIsActive, [])
    }

    startingIndex(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.startingIndex, [])
    }

    startingIndexBlock(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.startingIndexBlock, [])
    }

    supportsInterface(interfaceId: string): Promise<boolean> {
        return this.eth_call(functions.supportsInterface, [interfaceId])
    }

    symbol(): Promise<string> {
        return this.eth_call(functions.symbol, [])
    }

    tokenByIndex(index: ethers.BigNumber): Promise<ethers.BigNumber> {
        return this.eth_call(functions.tokenByIndex, [index])
    }

    tokenOfOwnerByIndex(owner: string, index: ethers.BigNumber): Promise<ethers.BigNumber> {
        return this.eth_call(functions.tokenOfOwnerByIndex, [owner, index])
    }

    tokenURI(tokenId: ethers.BigNumber): Promise<string> {
        return this.eth_call(functions.tokenURI, [tokenId])
    }

    totalSupply(): Promise<ethers.BigNumber> {
        return this.eth_call(functions.totalSupply, [])
    }
}
```

We then need to edit the processor.ts file, change the import line to import from this new file, and add the Contract class to the import. We have to rename the class on import to avoid a clash with the Contract database model:

```typescript
import { events, Contract as ContractAPI, functions } from "./abi/bayc";
```

Now we need to work on the getOrCreateContractEntity function. We need to change the type of the function’s argument, because the Contract class needs a context to be initialized, so we are going to use BlockHandlerContext.

Let’s then create an instance of the ContractAPI class, and declare and initialize the name, symbol, and totalSupply variables with default values.

Since querying the on-chain contract is essentially an API call (an RPC request) that could fail, we wrap it in a try/catch block so we can handle the error. Inside the try block, we reassign the variables to the values returned by the calls.

When there is an error, we catch it and log it. We can also check the error’s type, so that we can log its message directly. Finally, we assign these values to the Contract model, replacing the hardcoded ones.

Here is the final result:

```typescript
let contractEntity: Contract | undefined;

export async function getOrCreateContractEntity(
  ctx: BlockHandlerContext<Store>
): Promise<Contract> {
  if (contractEntity == null) {
    contractEntity = await ctx.store.get(Contract, contractAddress);
    if (contractEntity == null) {
      const contractAPI = new ContractAPI(ctx, contractAddress);
      let name = "", symbol = "", totalSupply = BigNumber.from(0);
      try {
        name = await contractAPI.name();
        symbol = await contractAPI.symbol();
        totalSupply = await contractAPI.totalSupply();
      } catch (error) {
        ctx.log.warn(`[API] Error while fetching Contract metadata for address ${contractAddress}`);
        if (error instanceof Error) {
          ctx.log.warn(`${error.message}`);
        }
      }
      contractEntity = new Contract({
        id: contractAddress,
        name: name,
        symbol: symbol,
        totalSupply: totalSupply.toBigInt(),
      });
      await ctx.store.insert(contractEntity);
    }
  }
  return contractEntity;
}
```
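The try/catch-with-defaults pattern above can also be factored into a small helper, so that each read falls back independently. In the code above, a failing `name()` call means `symbol()` and `totalSupply()` are never attempted. This is a minimal sketch; `withFallback` and its `warn` callback are hypothetical names, not part of the Subsquid SDK.

```typescript
// Hypothetical helper: run an async read and return a default value if it fails.
// It mirrors the try/catch in getOrCreateContractEntity, but isolates each call,
// so a failing name() does not prevent symbol() and totalSupply() from running.
async function withFallback<T>(
  label: string,
  read: () => Promise<T>,
  fallback: T,
  warn: (message: string) => void = () => {}
): Promise<T> {
  try {
    return await read();
  } catch (error) {
    // Log a generic message first, then the error's own message when available
    warn(`[API] Error while fetching ${label}`);
    if (error instanceof Error) warn(error.message);
    return fallback;
  }
}
```

Inside `getOrCreateContractEntity`, each read would then look something like `name = await withFallback("name", () => contractAPI.name(), "", (m) => ctx.log.warn(m))`.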

It’s now time to run our project and verify it.

We need to make sure that the RPC_ENDPOINT environment variable in the .env file points to a working RPC endpoint, like in the past lesson, because we are going to use that endpoint to contact the blockchain and query the contract:

```bash
DB_NAME=squid
DB_PORT=23798
GQL_PORT=4350
# JSON-RPC node endpoint, both wss and https endpoints are accepted
RPC_ENDPOINT="https://rpc.ankr.com/eth"
```
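Since the contract reads depend on `RPC_ENDPOINT`, it can help to fail fast when the variable is missing, instead of discovering the problem at the first RPC call. A minimal sketch; `requireEnv` is a hypothetical helper, and in processor.ts you would call it as `requireEnv("RPC_ENDPOINT", process.env)` and pass the result to `setDataSource`.

```typescript
// Hypothetical helper: read a required variable from an environment-like record
// and throw a descriptive error if it is missing or blank.
function requireEnv(name: string, env: Record<string, string | undefined>): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`Missing required environment variable ${name} (check your .env file)`);
  }
  return value;
}
```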

Let’s now build the code, start the Postgres container, and launch the processor, in this sequence:

```bash
make build
make up
make process
```

We can also launch the GraphQL server, in a separate terminal window:

```bash
make serve
```

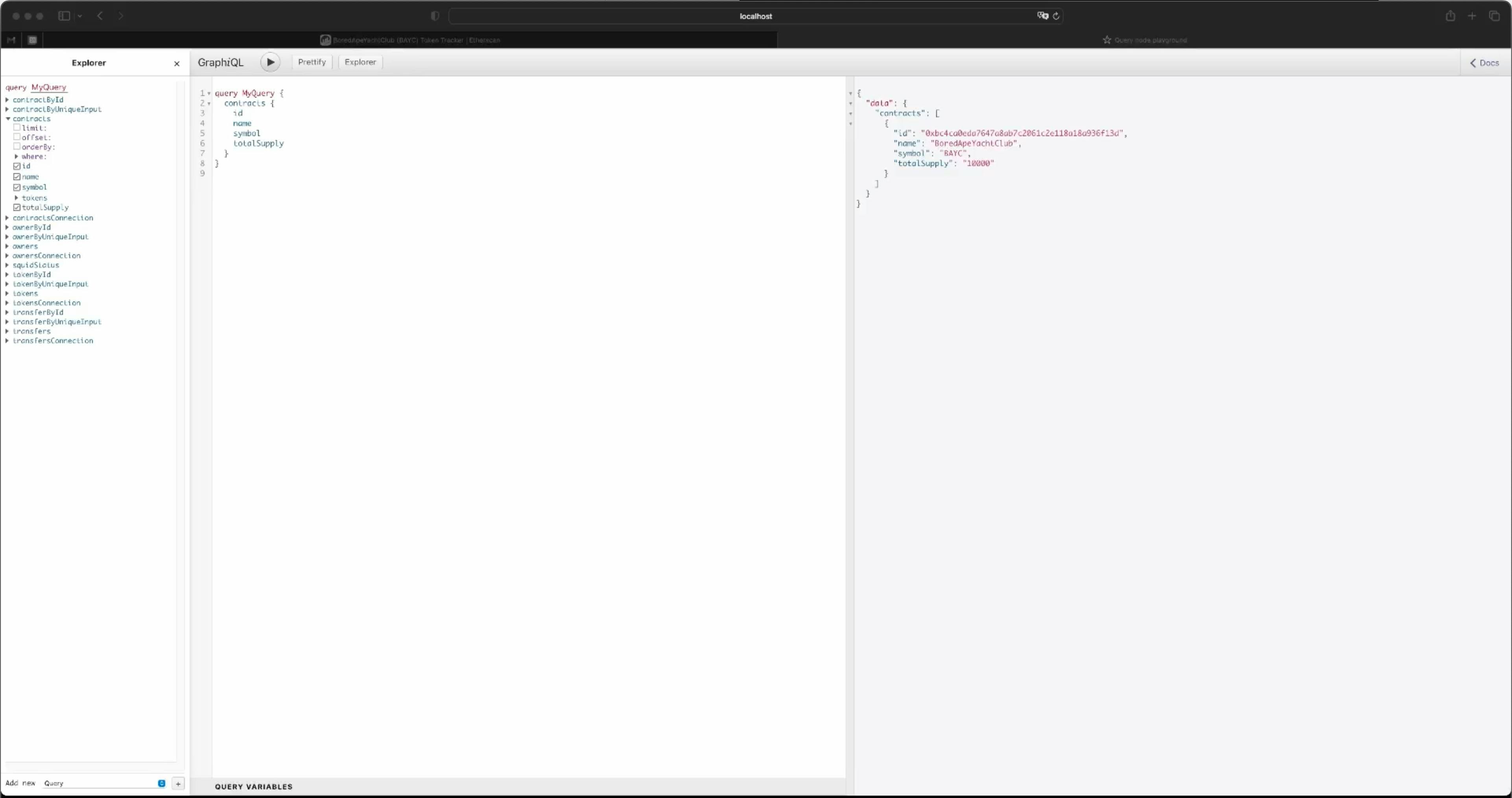

Then, let’s open the browser and verify that the Contract data is there and matches what Etherscan shows.

Because this specific contract, like most NFTs, has a tokenURI function, we could use the same kind of on-chain call to fetch each token’s URI and store the token metadata and image.

But RPC calls are very slow: they are the very reason we index data in the first place, and why Subsquid created the Archive data ingestion service. Bombarding blockchain nodes with one RPC request per token to fetch its URI is bad practice, and leads to poor indexing performance.

Luckily for us, there is a solution that lets us obtain this data while keeping indexing fast and efficient. It’s called a “Multicall contract”, and we’ll see it in a moment.

Multicall

In this section, we’ll go through how to read contract state in bulk via a Multicall contract. Let’s open the console window and type:

```bash
npx squid-evm-typegen --help
```

Here we can see that one of the options is multicall. But what is it?

If we search for Multicall on Etherscan, we can see a couple of contracts.

For this project, we are going to use the one from Uniswap. A Multicall contract lets you make a single call to it that performs multiple queries at once, saving the overhead of many separate requests.
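To put rough numbers on the savings: with one RPC request per token, N tokens cost N round trips, while a Multicall contract needs one request per page of calls. A back-of-the-envelope sketch, assuming a page size of 100 calls (the value used later in this lesson); `countRequests` is a hypothetical name, and real timing also depends on the node and on how heavy each call is.

```typescript
// Compare the number of RPC requests needed to read `calls` values
// one by one versus through a Multicall contract in pages of `pageSize`.
function countRequests(calls: number, pageSize: number): { individual: number; multicall: number } {
  if (pageSize <= 0) throw new Error("pageSize must be positive");
  return {
    individual: calls, // one eth_call per token
    multicall: Math.ceil(calls / pageSize), // one eth_call per page of aggregated calls
  };
}

console.log(countRequests(250, 100)); // { individual: 250, multicall: 3 }
```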

We can use the same command-line tool, but this time we omit the ABI file or contract address, and only specify the --multicall option:

```bash
npx squid-evm-typegen src/abi --multicall
```

This generates a TypeScript facade, similar to the ones we generated before, but slightly different, because the Multicall class offers different functions:

```typescript
export class Multicall extends ContractBase {

    static aggregate = aggregate

    static try_aggregate = try_aggregate

    aggregate<Args extends any[], R>(
        func: Func<Args, {}, R>,
        address: string,
        calls: Args[],
        paging?: number
    ): Promise<R[]>

    aggregate<Args extends any[], R>(
        func: Func<Args, {}, R>,
        calls: [address: string, args: Args][],
        paging?: number
    ): Promise<R[]>

    aggregate(
        calls: [func: AnyFunc, address: string, args: any[]][],
        paging?: number
    ): Promise<any[]>

    async aggregate(...args: any[]): Promise<any[]> {
        let [calls, funcs, page] = this.makeCalls(args)
        let size = calls.length
        let results = new Array(size)
        for (let [from, to] of splitIntoPages(size, page)) {
            let {returnData} = await this.eth_call(aggregate, [calls.slice(from, to)])
            for (let i = from; i < to; i++) {
                let data = returnData[i - from]
                results[i] = funcs[i].decodeResult(data)
            }
        }
        return results
    }

    tryAggregate<Args extends any[], R>(
        func: Func<Args, {}, R>,
        address: string,
        calls: Args[],
        paging?: number
    ): Promise<MulticallResult<R>[]>

    tryAggregate<Args extends any[], R>(
        func: Func<Args, {}, R>,
        calls: [address: string, args: Args][],
        paging?: number
    ): Promise<MulticallResult<R>[]>

    tryAggregate(
        calls: [func: AnyFunc, address: string, args: any[]][],
        paging?: number
    ): Promise<MulticallResult<any>[]>

    async tryAggregate(...args: any[]): Promise<any[]> {
        let [calls, funcs, page] = this.makeCalls(args)
        let size = calls.length
        let results = new Array(size)
        for (let [from, to] of splitIntoPages(size, page)) {
            let response = await this.eth_call(try_aggregate, [false, calls.slice(from, to)])
            for (let i = from; i < to; i++) {
                let res = response[i - from]
                if (res.success) {
                    try {
                        results[i] = {
                            success: true,
                            value: funcs[i].decodeResult(res.returnData)
                        }
                    } catch(err: any) {
                        results[i] = {success: false, returnData: res.returnData}
                    }
                } else {
                    results[i] = {success: false}
                }
            }
        }
        return results
    }

    private makeCalls(args: any[]): [calls: Call[], funcs: AnyFunc[], page: number] {
        let page = typeof args[args.length-1] == 'number' ? args.pop()! : Number.MAX_SAFE_INTEGER
        switch(args.length) {
            case 1: {
                let list: [func: AnyFunc, address: string, args: any[]][] = args[0]
                let calls = new Array(list.length)
                let funcs = new Array(list.length)
                for (let i = 0; i < list.length; i++) {
                    let [func, address, args] = list[i]
                    calls[i] = [address, func.encode(args)]
                    funcs[i] = func
                }
                return [calls, funcs, page]
            }
            case 2: {
                let func: AnyFunc = args[0]
                let list: [address: string, args: any[]][] = args[1]
                let calls = new Array(list.length)
                let funcs = new Array(list.length)
                for (let i = 0; i < list.length; i++) {
                    let [address, args] = list[i]
                    calls[i] = [address, func.encode(args)]
                    funcs[i] = func
                }
                return [calls, funcs, page]
            }
            case 3: {
                let func: AnyFunc = args[0]
                let address: string = args[1]
                let list: any[][] = args[2]
                let calls = new Array(list.length)
                let funcs = new Array(list.length)
                for (let i = 0; i < list.length; i++) {
                    let args = list[i]
                    calls[i] = [address, func.encode(args)]
                    funcs[i] = func
                }
                return [calls, funcs, page]
            }
            default:
                throw new Error('unexpected number of arguments')
        }
    }
}
```
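The generated class relies on a `splitIntoPages` helper that is not shown in the excerpt above. The following is a hypothetical reimplementation, written only to illustrate how the `paging` argument turns one long list of calls into several `[from, to)` slices, each sent as a separate `eth_call`; the generated helper may differ in its details.

```typescript
// Hypothetical reimplementation: yield [from, to) index ranges of at most `page` items.
function* splitIntoPages(size: number, page: number): Generator<[number, number]> {
  if (page <= 0) throw new Error("page size must be positive");
  for (let from = 0; from < size; from += page) {
    // The last page may be shorter than `page`
    yield [from, Math.min(from + page, size)];
  }
}

// With 250 calls and paging = 100, aggregate/tryAggregate would issue three eth_calls
console.log(Array.from(splitIntoPages(250, 100))); // [ [ 0, 100 ], [ 100, 200 ], [ 200, 250 ] ]
```

When no paging argument is passed, `makeCalls` uses `Number.MAX_SAFE_INTEGER`, which in this sketch yields a single page covering every call.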

Now, let’s open the GraphQL schema. Since the objective of this project is to fetch the token metadata URI, we need a field to store it, so we add a uri field to the Token entity:

```graphql
type Token @entity {
  id: ID!
  owner: Owner
  uri: String
  transfers: [Transfer!]! @derivedFrom(field: "token")
  contract: Contract
}

type Owner @entity {
  id: ID!
  ownedTokens: [Token!] @derivedFrom(field: "owner")
}

type Contract @entity {
  id: ID!
  name: String! @index
  symbol: String!
  totalSupply: BigInt!
  tokens: [Token!]! @derivedFrom(field: "contract")
}

type Transfer @entity {
  id: ID!
  token: Token!
  from: Owner
  to: Owner
  timestamp: DateTime! @index
  block: Int! @index
  transactionHash: String!
}
```

And, as usual, we need to re-generate the models, after changing the schema:

```bash
make codegen
```

We need to install a couple of new libraries (lodash and its type definitions), so in our console window, let’s type:

```bash
npm i lodash @types/lodash
```

Now let’s open processor.ts, add a new constant for the Multicall contract address, and import the maxBy function from the lodash library we just installed, as well as the generated Multicall class:

```typescript
import { maxBy } from "lodash";
import { Multicall } from "./abi/multicall";
```

We now need to head over to the section where we create a new Token instance, inside the saveENSData function, which we should rename to saveBAYCData, along with all the other variables containing ENS in their names.

Let’s add the new uri field there, and assign an empty string to it, for the moment.

At the bottom of the function, after the loop and before saving all the model sets, we call the Multicall contract once (split into pages of 100 calls) to fetch the URIs of all the tokens we have encountered.

We need to calculate the latest block number in the batch we received, and this is where the lodash function comes in handy.
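If you’d rather avoid the lodash dependency, the same value can be computed with a plain `reduce`. This sketch uses a hypothetical `latestBlock` name and also returns `undefined` for an empty batch. That is a case where `maxBy(...)!.block` would throw, because `maxBy` returns `undefined` for an empty array.

```typescript
// Minimal shape needed here; BAYCData in processor.ts has more fields.
interface HasBlock {
  block: number;
}

// Return the highest block number in the batch, or undefined if the batch is empty.
function latestBlock(items: HasBlock[]): number | undefined {
  if (items.length === 0) return undefined;
  return items.reduce((max, item) => Math.max(max, item.block), items[0].block);
}

console.log(latestBlock([{ block: 100 }, { block: 250 }, { block: 180 }])); // 250
```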

Then we create an instance of the Multicall class, passing it the context, the block height we just calculated, and the address of the Multicall contract.

We can add a log line, which will give us a sense of how long each call takes.

We then collect the results of the Multicall API call in a constant. I am using the tryAggregate function of the Multicall contract to aggregate calls to the tokenURI function of the BAYC NFT contract, one for each of the tokens found in the data array, each paired with the address of the NFT contract itself.

Then, for each result, we look up the related item in the data array and use it to fetch the corresponding token.
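One thing to keep in mind: the call list is built from `baycDataArr`, which has one entry per transfer, so a token transferred several times in the same batch is queried several times. A possible refinement is to deduplicate the IDs before building the calls, then key the results by token ID rather than by transfer index. The sketch below does this with hypothetical helpers `uniqueTokenIds` and `zipById`. It relies on the fact, visible in the generated `tryAggregate` above, that results come back in the same order as the calls.

```typescript
// Collect each token ID once, preserving first-seen order.
function uniqueTokenIds(transfers: { tokenId: bigint }[]): bigint[] {
  const seen = new Set<string>();
  const ids: bigint[] = [];
  for (const { tokenId } of transfers) {
    const key = tokenId.toString();
    if (!seen.has(key)) {
      seen.add(key);
      ids.push(tokenId);
    }
  }
  return ids;
}

// Pair each result with the token ID at the same index (results follow call order),
// keyed by the string form used as Token.id in processor.ts.
function zipById<R>(ids: bigint[], results: R[]): Map<string, R> {
  if (ids.length !== results.length) throw new Error("results do not match calls");
  return new Map(ids.map((id, i) => [id.toString(), results[i]] as [string, R]));
}
```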

Then we process the result and assign the correct value to a uri variable.
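The branching on `res.success` works because each `tryAggregate` result is a discriminated union. Judging from the generated code above, a result is either `{success: true, value}` or `{success: false}`, optionally carrying the raw `returnData` when decoding failed. The sketch below restates that shape locally (redeclared for illustration, not imported from the generated file). It extracts a URI with a decoder passed in as a parameter, standing in for `functions.tokenURI.tryDecodeResult`, whose exact return type is an assumption here.

```typescript
// Local restatement of the result shape produced by tryAggregate in the generated facade.
type MulticallResult<T> =
  | { success: true; value: T }
  | { success: false; returnData?: string };

// Extract a token URI from a result, falling back to re-decoding raw data, then to "".
function uriFromResult(
  res: MulticallResult<string>,
  tryDecode: (data: string) => string | undefined
): string {
  if (res.success === true) return res.value; // narrowed to the success branch
  if (res.returnData) return tryDecode(res.returnData) ?? "";
  return "";
}
```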

Finally, we assign the uri to the uri field of the Token model. The save statements at the bottom make sure these changes are persisted in the database.

Here is the final result:

```typescript
import { Store, TypeormDatabase } from "@subsquid/typeorm-store";
import { BlockHandlerContext, EvmBatchProcessor, LogHandlerContext } from "@subsquid/evm-processor";
import { events, Contract as ContractAPI, functions } from "./abi/bayc";
import { Contract, Owner, Token, Transfer } from "./model";
import { In } from "typeorm";
import { BigNumber } from "ethers";
import { maxBy } from "lodash";
import { Multicall } from "./abi/multicall";

const contractAddress =
  "0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D".toLowerCase();
const multicallAddress = "0x5ba1e12693dc8f9c48aad8770482f4739beed696".toLowerCase();

const processor = new EvmBatchProcessor()
  .setDataSource({
    chain: process.env.RPC_ENDPOINT,
    archive: "https://eth.archive.subsquid.io",
  })
  .addLog(contractAddress, {
    filter: [[events.Transfer.topic]],
    data: {
      evmLog: {
        topics: true,
        data: true,
      },
      transaction: {
        hash: true,
      },
    },
  });

processor.run(new TypeormDatabase(), async (ctx) => {
  const baycDataArr: BAYCData[] = [];
  for (let c of ctx.blocks) {
    for (let i of c.items) {
      if (i.address === contractAddress && i.kind === "evmLog") {
        if (i.evmLog.topics[0] === events.Transfer.topic) {
          const baycData = handleTransfer({
            ...ctx,
            block: c.header,
            ...i,
          });
          baycDataArr.push(baycData);
        }
      }
    }
  }
  await saveBAYCData(
    {
      ...ctx,
      block: ctx.blocks[ctx.blocks.length - 1].header,
    },
    baycDataArr
  );
});

let contractEntity: Contract | undefined;

export async function getOrCreateContractEntity(
  ctx: BlockHandlerContext<Store>
): Promise<Contract> {
  if (contractEntity == null) {
    contractEntity = await ctx.store.get(Contract, contractAddress);
    if (contractEntity == null) {
      const contractAPI = new ContractAPI(ctx, contractAddress);
      let name = "", symbol = "", totalSupply = BigNumber.from(0);
      try {
        name = await contractAPI.name();
        symbol = await contractAPI.symbol();
        totalSupply = await contractAPI.totalSupply();
      } catch (error) {
        ctx.log.warn(`[API] Error while fetching Contract metadata for address ${contractAddress}`);
        if (error instanceof Error) {
          ctx.log.warn(`${error.message}`);
        }
      }
      contractEntity = new Contract({
        id: contractAddress,
        name: name,
        symbol: symbol,
        totalSupply: totalSupply.toBigInt(),
      });
      await ctx.store.insert(contractEntity);
    }
  }
  return contractEntity;
}

type BAYCData = {
  id: string;
  from: string;
  to: string;
  tokenId: bigint;
  timestamp: Date;
  block: number;
  transactionHash: string;
};

function handleTransfer(
  ctx: LogHandlerContext<
    Store,
    { evmLog: { topics: true; data: true }; transaction: { hash: true } }
  >
): BAYCData {
  const { evmLog, block, transaction } = ctx;
  const { from, to, tokenId } = events.Transfer.decode(evmLog);
  const baycData: BAYCData = {
    id: `${transaction.hash}-${evmLog.address}-${tokenId.toBigInt()}-${evmLog.index}`,
    from,
    to,
    tokenId: tokenId.toBigInt(),
    timestamp: new Date(block.timestamp),
    block: block.height,
    transactionHash: transaction.hash,
  };
  return baycData;
}

async function saveBAYCData(
  ctx: BlockHandlerContext<Store>,
  baycDataArr: BAYCData[]
) {
  // Nothing to save (and no block height for Multicall) if the batch has no transfers
  if (baycDataArr.length === 0) return;

  const tokensIds: Set<string> = new Set();
  const ownersIds: Set<string> = new Set();
  for (const baycData of baycDataArr) {
    tokensIds.add(baycData.tokenId.toString());
    if (baycData.from) ownersIds.add(baycData.from.toLowerCase());
    if (baycData.to) ownersIds.add(baycData.to.toLowerCase());
  }

  const transfers: Set<Transfer> = new Set();
  const tokens: Map<string, Token> = new Map(
    (await ctx.store.findBy(Token, { id: In([...tokensIds]) })).map((token) => [
      token.id,
      token,
    ])
  );
  const owners: Map<string, Owner> = new Map(
    (await ctx.store.findBy(Owner, { id: In([...ownersIds]) })).map((owner) => [
      owner.id,
      owner,
    ])
  );

  for (const baycData of baycDataArr) {
    const { id, tokenId, from, to, block, transactionHash, timestamp } = baycData;

    // Owner IDs are stored lowercase, so look them up lowercase too
    let fromOwner = owners.get(from.toLowerCase());
    if (fromOwner == null) {
      fromOwner = new Owner({ id: from.toLowerCase() });
      owners.set(fromOwner.id, fromOwner);
    }

    let toOwner = owners.get(to.toLowerCase());
    if (toOwner == null) {
      toOwner = new Owner({ id: to.toLowerCase() });
      owners.set(toOwner.id, toOwner);
    }

    const tokenIdString = tokenId.toString();
    let token = tokens.get(tokenIdString);
    if (token == null) {
      token = new Token({
        id: tokenIdString,
        uri: "", // will be filled-in by Multicall
        contract: await getOrCreateContractEntity(ctx),
      });
      tokens.set(token.id, token);
    }
    token.owner = toOwner;

    if (toOwner && fromOwner) {
      const transfer = new Transfer({
        id,
        block,
        timestamp,
        transactionHash,
        from: fromOwner,
        to: toOwner,
        token,
      });
      transfers.add(transfer);
    }
  }

  const maxHeight = maxBy(baycDataArr, (data) => data.block)!.block;
  const multicall = new Multicall(ctx, { height: maxHeight }, multicallAddress);

  ctx.log.info(`Calling multicall for ${baycDataArr.length} tokens...`);
  const results = await multicall.tryAggregate(
    functions.tokenURI,
    baycDataArr.map((data) => [contractAddress, [BigNumber.from(data.tokenId)]] as [string, BigNumber[]]),
    100
  );

  results.forEach((res, i) => {
    let t = tokens.get(baycDataArr[i].tokenId.toString());
    if (t) {
      let uri = '';
      if (res.success) {
        uri = <string>res.value;
      } else if (res.returnData) {
        uri = <string>functions.tokenURI.tryDecodeResult(res.returnData) || '';
      }
      t.uri = uri;
    }
  });
  ctx.log.info(`Done`);

  await ctx.store.save([...owners.values()]);
  await ctx.store.save([...tokens.values()]);
  await ctx.store.save([...transfers]);
}
```

Because we changed the schema, we need to create a new migration, and we have to delete the old one first.

Let’s open the terminal and build our code, then we can spin up the database container, and generate the migration:

```bash
make build
make up
make migration
```

Finally, it’s time to launch the processor.

```bash
make process
```

We can launch the GraphQL server as usual, even if indexing hasn’t finished yet.

```bash
make serve
```

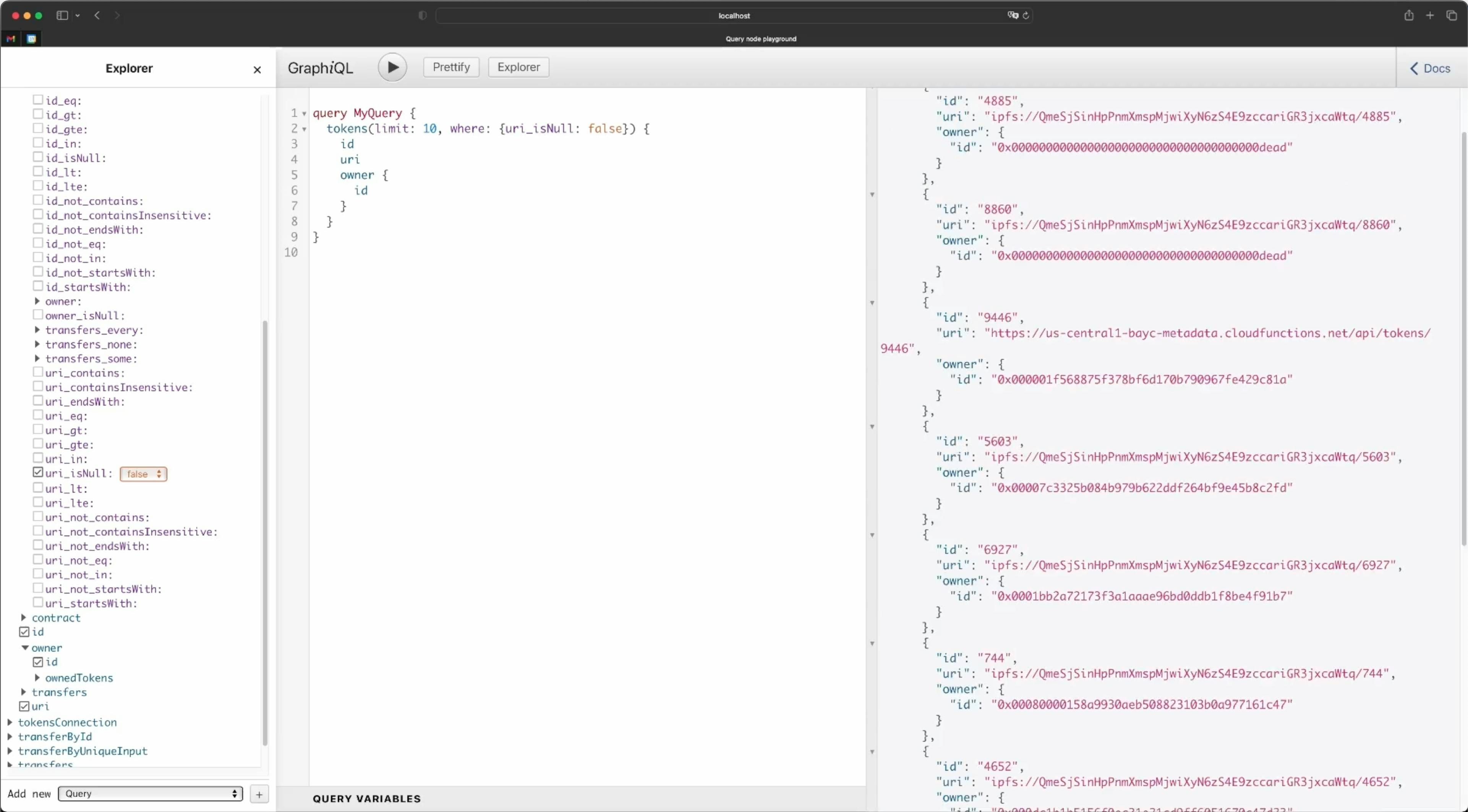

Let’s open the browser at the address we saw last time, localhost:4350/graphql, and test our work so far.

Let’s find all the tokens that have a value in the uri field, and get some information about them, including the uri itself:

```graphql
query MyQuery {
  tokens(limit: 10, where: {uri_isNull: false}) {
    id
    uri
    owner {
      id
    }
  }
}
```

We can consider what we built today a success, as these tokens have a URI.

We can actually visit that address in our browser and find the token’s metadata, including the image URL.

In the next section, we are going to find out how to query an arbitrary external API to fetch this metadata and save the image URL. Thanks to the flexibility of Subsquid’s SDK, we can augment the data we are indexing with data from any API.

Augment on-chain data via external APIs

In this section we are going to discuss augmenting on-chain data, using data from external APIs.

This is not a "feature" per se, and it does not have a dedicated docs page, but it deserves discussion: it gives developers an additional degree of freedom, and a lot of people might not expect it to be possible.

First of all, let’s consider a possible use case: you could easily develop a project that tracks the transfers of one or more tokens.

But what if you also wanted to store the token’s historical price in fiat currency at the moment of each transaction? This information does not come from the blockchain, so it needs to be sourced elsewhere. With Subsquid, you could obtain these prices from services like CoinGecko, or similar, and augment the transfer data with fiat prices.
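As a concrete illustration, suppose you already have a series of historical prices from CoinGecko or a similar service (fetching it is out of scope here). Attaching a price to a transfer is then a lookup of the most recent price at or before the transfer’s timestamp. A minimal sketch, assuming the series is sorted by ascending timestamp; `PricePoint` and `priceAt` are hypothetical names.

```typescript
interface PricePoint {
  timestamp: number; // milliseconds since epoch, like Date.getTime()
  usd: number;
}

// Binary search for the latest price at or before `timestamp`.
// Assumes `prices` is sorted by ascending timestamp; returns undefined if none qualifies.
function priceAt(prices: PricePoint[], timestamp: number): number | undefined {
  let lo = 0;
  let hi = prices.length - 1;
  let found: number | undefined;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (prices[mid].timestamp <= timestamp) {
      found = prices[mid].usd; // candidate; keep looking for a later one on the right
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  return found;
}
```

For a Transfer with a `timestamp: Date` field, the lookup would be `priceAt(prices, transfer.timestamp.getTime())`.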

Another example is what we just finished implementing in the previous chapter.

We edited our project and went from indexing Ethereum Name Service tokens to indexing Bored Ape Yacht Club NFTs. This is already a great showcase of the flexibility of the SDK and I hope it showed how easy it was to make changes.

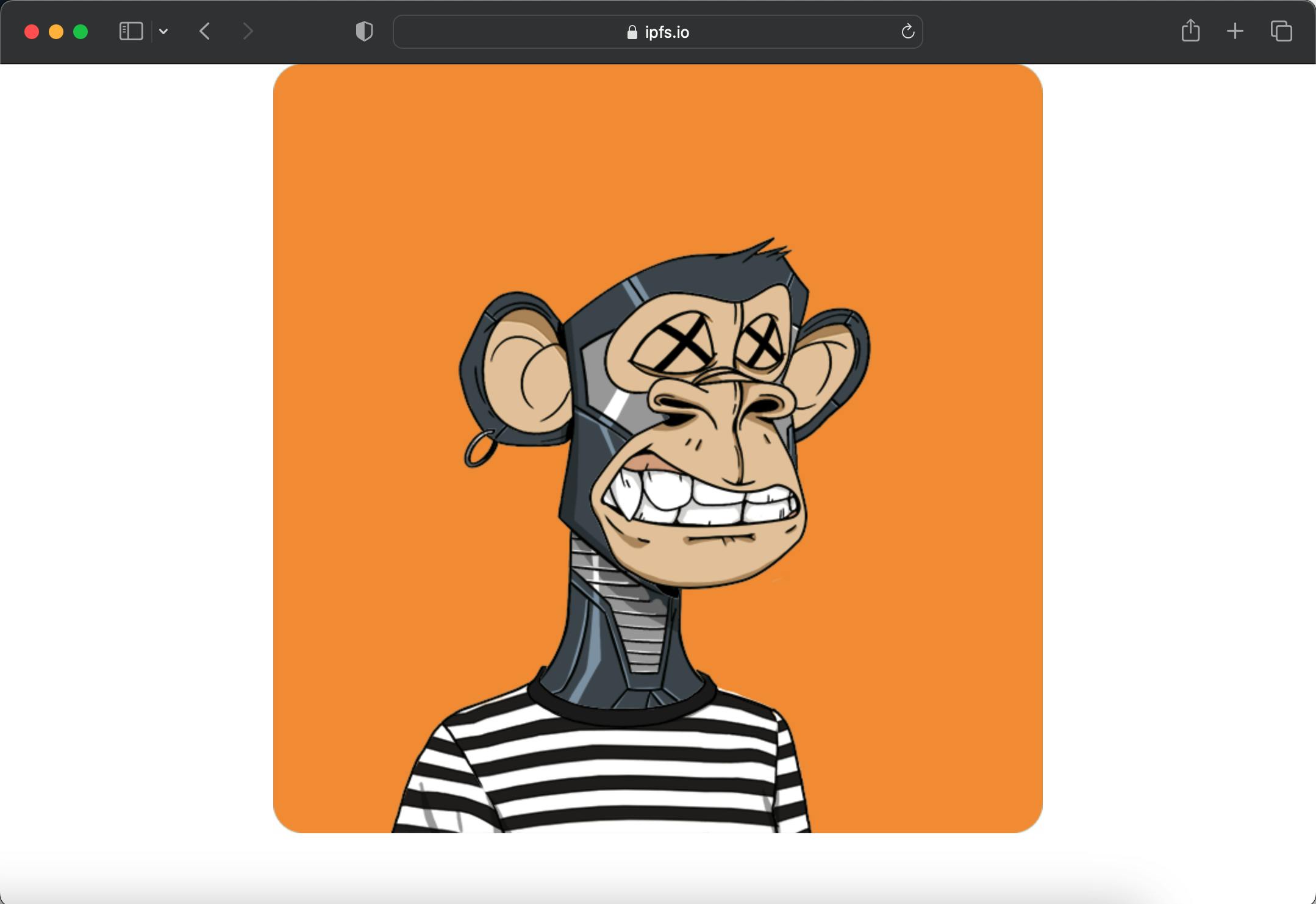

We also read the contract metadata from the blockchain, and used a Multicall contract to obtain the URI of each token’s metadata. This metadata contains useful information and, most importantly, the NFT image:

```json
{
  "image": "https://ipfs.io/ipfs/QmRRPWG96cmgTn2qSzjwr2qvfNEuhunv6FNeMFGa9bx6mQ",
  "attributes": [
    { "trait_type": "Fur", "value": "Robot" },
    { "trait_type": "Clothes", "value": "Striped Tee" },
    { "trait_type": "Background", "value": "Orange" },
    { "trait_type": "Eyes", "value": "X Eyes" },
    { "trait_type": "Earring", "value": "Silver Hoop" },
    { "trait_type": "Mouth", "value": "Discomfort" }
  ]
}
```
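Later in this lesson, the processor reads `res.data.image` directly, trusting the shape of whatever the URL returns. Metadata is external, untrusted JSON, so a small type guard can make this step more defensive. A minimal sketch; `extractImage` is a hypothetical helper, and it only assumes an `image` string field like the one in the sample above.

```typescript
// Return the `image` field of a metadata document if it is a non-empty string.
function extractImage(metadata: unknown): string | undefined {
  if (typeof metadata !== "object" || metadata === null) return undefined;
  const image = (metadata as { image?: unknown }).image;
  return typeof image === "string" && image.length > 0 ? image : undefined;
}

const sample = JSON.parse(
  '{"image": "https://ipfs.io/ipfs/QmRRPWG96cmgTn2qSzjwr2qvfNEuhunv6FNeMFGa9bx6mQ", "attributes": []}'
);
console.log(extractImage(sample)); // https://ipfs.io/ipfs/QmRRPWG96cmgTn2qSzjwr2qvfNEuhunv6FNeMFGa9bx6mQ
```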

Let’s keep this in mind, as we are going to use it in a moment.

An improvement to our indexer project would be, for example, to fetch the metadata file at the given token URI, parse it, and store the image URL in the database. This requires calling an endpoint that is external to the blockchain, just like calling a CoinGecko API, for example.

In our case, this can substantially improve the performance of any web application that wants to show these NFTs, because image locations are pre-fetched and stored in a database. That’s the right way to build a performant and scalable web3 frontend.
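One practical detail before implementing this: token URIs and image fields are often `ipfs://` links rather than HTTP URLs, and an HTTP client such as axios cannot fetch that scheme directly. A common workaround is to rewrite them to a public gateway URL, such as the `https://ipfs.io/ipfs/` prefix visible in the metadata sample above. A minimal sketch; `toGatewayUrl` is a hypothetical helper, and the choice of gateway is an assumption you may want to make configurable.

```typescript
// Rewrite ipfs:// URIs to an HTTP gateway URL; leave other URLs untouched.
function toGatewayUrl(uri: string, gateway = "https://ipfs.io/ipfs/"): string {
  const prefix = "ipfs://";
  if (!uri.startsWith(prefix)) return uri;
  // Some URIs use the form ipfs://ipfs/<cid>; drop the redundant segment
  const path = uri.slice(prefix.length).replace(/^ipfs\//, "");
  return gateway + path;
}

console.log(toGatewayUrl("ipfs://QmRRPWG96cmgTn2qSzjwr2qvfNEuhunv6FNeMFGa9bx6mQ"));
// https://ipfs.io/ipfs/QmRRPWG96cmgTn2qSzjwr2qvfNEuhunv6FNeMFGa9bx6mQ
```

In the processor, the request would then become something like `api.get(toGatewayUrl(t.uri))`.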

Let’s start implementing this. We need to open the schema file and add a new field to store the image URL.

```graphql
type Token @entity {
  id: ID!
  owner: Owner
  uri: String
  imageUrl: String
  transfers: [Transfer!]! @derivedFrom(field: "token")
  contract: Contract
}

type Owner @entity {
  id: ID!
  ownedTokens: [Token!] @derivedFrom(field: "owner")
}

type Contract @entity {
  id: ID!
  name: String! @index
  symbol: String!
  totalSupply: BigInt!
  tokens: [Token!]! @derivedFrom(field: "contract")
}

type Transfer @entity {
  id: ID!
  token: Token!
  from: Owner
  to: Owner
  timestamp: DateTime! @index
  block: Int! @index
  transactionHash: String!
}
```

Next, if the processor is still running, we need to stop it, and then generate updated model classes for our schema:

```bash
make codegen
```

We also need a library to perform API requests. For this, we can use axios, for example:

```bash
npm i axios
```

Let’s open the processor.ts file. Right at the top of the file, we can create an instance of the axios API client (this requires importing Axios from "axios" and https from "https", as in the full file below). Also, to avoid requesting metadata when we already have the image URL, we can build a map of token IDs to the related image URLs, which will act as a cache. It could be done better, but it still improves performance and gets great results with little effort.

```typescript
const tokenIdToImageUrl = new Map<string, string>();

export const api = Axios.create({
  // baseURL: BASE_URL,
  headers: {
    'Content-Type': 'application/json',
  },
  withCredentials: false,
  timeout: 5000,
  httpsAgent: new https.Agent({ keepAlive: true }),
})
```
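As noted above, the cache “could be done better”. One limitation is that a plain `Map` grows without bound for the lifetime of the processor. That is harmless for BAYC’s 10,000 tokens but could matter for much larger collections. A possible improvement is a small least-recently-used cache built on `Map`’s insertion order, sketched below. `LruCache` and its capacity are hypothetical, not part of the Subsquid SDK.

```typescript
// Minimal least-recently-used cache. A Map iterates keys in insertion order,
// so re-inserting a key on access moves it to the end, and the first key is the oldest.
class LruCache<K, V> {
  private readonly entries = new Map<K, V>();
  private readonly capacity: number;

  constructor(capacity: number) {
    if (capacity <= 0) throw new Error("capacity must be positive");
    this.capacity = capacity;
  }

  has(key: K): boolean {
    return this.entries.has(key);
  }

  get(key: K): V | undefined {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key) as V;
    // Move the key to the end to mark it as most recently used
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: K, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.capacity) {
      // Evict the least recently used entry (first in iteration order)
      const oldest = this.entries.keys().next().value as K;
      this.entries.delete(oldest);
    }
  }
}
```

Because it exposes `has`, `get`, and `set`, it could stand in for the `Map` with something like `new LruCache<string, string>(50000)`.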

In the part of the code where we fetch token URIs through the Multicall contract interface, we want to check whether our cache already has the image URL.

We can collect all the tokens missing an image in an array, declared right before the forEach loop.

Then, right after assigning the token URI to the homonymous model field, we check whether our cache has an entry for the token. If it doesn’t, we add the token’s ID to the list.

```typescript
const tokensWithNoImage: string[] = [];
results.forEach((res, i) => {
  let t = tokens.get(baycDataArr[i].tokenId.toString());
  if (t) {
    let uri = '';
    if (res.success) {
      uri = <string>res.value;
    } else if (res.returnData) {
      uri = <string>functions.tokenURI.tryDecodeResult(res.returnData) || '';
    }
    t.uri = uri;
    if (!tokenIdToImageUrl.has(t.id)) tokensWithNoImage.push(t.id);
  }
})
ctx.log.info(`Done`);
```

Right after that, before saving all the models, we fetch the metadata for all the tokens in the list. We need to wrap everything in a Promise.all(), because we have to wait for all the asynchronous requests to complete.

For each item in the list, we execute an asynchronous function that retrieves the token, given its ID.

Then we need a couple of checks, because we might not find the ID in the tokens map, and because the uri field could be empty.

Next, the API call could fail or throw an error, so we wrap it in a try/catch statement.

We perform a GET request on the token’s URI, which points to its metadata, then store the image field of the response in our cache and in the imageUrl field of the token model.

In the catch statement, we can simply log the error.

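```typescript
await Promise.all(
  tokensWithNoImage.map(async (id) => {
    const t = tokens.get(id);
    if (t && t.uri) {
      try {
        const res = await api.get(t.uri);
        tokenIdToImageUrl.set(id, res.data.image);
        t.imageUrl = res.data.image;
      } catch (error) {
        // Log and move on: a missing image should not stop indexing
        ctx.log.warn(`Error while fetching metadata for token ${id}`);
        if (error instanceof Error) ctx.log.warn(error.message);
      }
    }
  })
);
```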
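`Promise.all` starts every request at once, so a batch with hundreds of new tokens fires hundreds of simultaneous HTTP requests, which a public gateway may throttle or reject. A possible refinement is to process the list in fixed-size chunks, awaiting each chunk before starting the next. `mapInChunks` and the chunk size are hypothetical. In the processor, the callback would be the same async function passed to `tokensWithNoImage.map` above, which catches its own errors, so no single failure rejects a chunk.

```typescript
// Run `fn` over `items` with at most `chunkSize` calls in flight at a time,
// returning results in the original order.
async function mapInChunks<T, R>(
  items: T[],
  chunkSize: number,
  fn: (item: T) => Promise<R>
): Promise<R[]> {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  const results: R[] = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    const chunk = items.slice(start, start + chunkSize);
    // Wait for the whole chunk before starting the next one
    results.push(...(await Promise.all(chunk.map(fn))));
  }
  return results;
}
```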

If you want to verify your work, here is the entire processor.ts file:

import { Store, TypeormDatabase } from "@subsquid/typeorm-store";

import {BlockHandlerContext, EvmBatchProcessor, LogHandlerContext} from '@subsquid/evm-processor'

import { events, Contract as ContractAPI, functions } from "./abi/bayc";

import { Contract, Owner, Token, Transfer } from "./model";

import { In } from "typeorm";

import { BigNumber } from "ethers";

import { maxBy } from "lodash";

import { Multicall } from "./abi/multicall";

import Axios from "axios";

import https from 'https';

const contractAddress =

// "0x57f1887a8BF19b14fC0dF6Fd9B2acc9Af147eA85".toLowerCase();

//BAYC

"0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D".toLowerCase();

const multicallAddress = "0x5ba1e12693dc8f9c48aad8770482f4739beed696".toLowerCase();

const tokenIdToImageUrl = new Map<string, string> ();

export const api = Axios.create({

// baseURL: BASE_URL,

headers: {

'Content-Type': 'application/json',

},

withCredentials: false,

timeout: 5000,

httpsAgent: new https.Agent({ keepAlive: true }),

})

const processor = new EvmBatchProcessor()

.setDataSource({

chain: process.env.RPC_ENDPOINT,

archive: "https://eth.archive.subsquid.io",

})

.addLog(contractAddress, {

filter: [

[

events.Transfer.topic,

],

],

data: {

evmLog: {

topics: true,

data: true,

},

transaction: {

hash: true,

},

},

});

processor.run(new TypeormDatabase(), async (ctx) => {

const baycDataArr: BAYCData[] = [];

for (let c of ctx.blocks) {

for (let i of c.items) {

if (i.address === contractAddress && i.kind === "evmLog") {

if (i.evmLog.topics[0] === events.Transfer.topic) {

const baycData = handleTransfer({

...ctx,

block: c.header,

...i,

});

baycDataArr.push(baycData);

}

}

}

}

await saveBAYCData(

{

...ctx,

block: ctx.blocks[ctx.blocks.length - 1].header,

},

baycDataArr

);

});

let contractEntity: Contract | undefined;

export async function getOrCreateContractEntity(

ctx: BlockHandlerContext<Store>

): Promise<Contract> {

if (contractEntity == null) {

contractEntity = await ctx.store.get(Contract, contractAddress);

if (contractEntity == null) {

const contractAPI = new ContractAPI(ctx, contractAddress);

let name = "", symbol = "", totalSupply = BigNumber.from(0);

try {

name = await contractAPI.name();

symbol = await contractAPI.symbol();

totalSupply = await contractAPI.totalSupply();

} catch (error) {

ctx.log.warn(`[API] Error while fetching Contract metadata for address ${contractAddress}`);

if (error instanceof Error) {

ctx.log.warn(`${error.message}`);

}

}

contractEntity = new Contract({

id: contractAddress,

name: name,

symbol: symbol,

totalSupply: totalSupply.toBigInt(),

});

await ctx.store.insert(contractEntity);

}

}

return contractEntity;

}

type BAYCData = {
  id: string;
  from: string;
  to: string;
  tokenId: bigint;
  timestamp: bigint;
  block: number;
  transactionHash: string;
};

function handleTransfer(
  ctx: LogHandlerContext<
    Store,
    { evmLog: { topics: true; data: true }; transaction: { hash: true } }
  >
): BAYCData {
  const { evmLog, block, transaction } = ctx;
  const { from, to, tokenId } = events.Transfer.decode(evmLog);
  const baycData: BAYCData = {
    // Unique per log: transaction hash + contract + token + log index
    id: `${transaction.hash}-${evmLog.address}-${tokenId.toBigInt()}-${evmLog.index}`,
    from,
    to,
    tokenId: tokenId.toBigInt(),
    timestamp: BigInt(block.timestamp),
    block: block.height,
    transactionHash: transaction.hash,
  };
  return baycData;
}

async function saveBAYCData(
  ctx: BlockHandlerContext<Store>,
  baycDataArr: BAYCData[]
) {
  // Collect every token and owner ID touched in this batch
  const tokensIds: Set<string> = new Set();
  const ownersIds: Set<string> = new Set();
  for (const baycData of baycDataArr) {
    tokensIds.add(baycData.tokenId.toString());
    if (baycData.from) ownersIds.add(baycData.from.toLowerCase());
    if (baycData.to) ownersIds.add(baycData.to.toLowerCase());
  }

  // Load the existing entities with one query per table
  const transfers: Set<Transfer> = new Set();
  const tokens: Map<string, Token> = new Map(
    (await ctx.store.findBy(Token, { id: In([...tokensIds]) })).map((token) => [
      token.id,
      token,
    ])
  );
  const owners: Map<string, Owner> = new Map(
    (await ctx.store.findBy(Owner, { id: In([...ownersIds]) })).map((owner) => [
      owner.id,
      owner,
    ])
  );

  for (const baycData of baycDataArr) {
    const { id, tokenId, from, to, block, transactionHash, timestamp } = baycData;

    // Owner IDs are stored lowercase, so lookups must use the lowercase address too
    let fromOwner = owners.get(from.toLowerCase());
    if (fromOwner == null) {
      fromOwner = new Owner({ id: from.toLowerCase() });
      owners.set(fromOwner.id, fromOwner);
    }
    let toOwner = owners.get(to.toLowerCase());
    if (toOwner == null) {
      toOwner = new Owner({ id: to.toLowerCase() });
      owners.set(toOwner.id, toOwner);
    }

    const tokenIdString = tokenId.toString();
    let token = tokens.get(tokenIdString);
    if (token == null) {
      token = new Token({
        id: tokenIdString,
        uri: "", // will be filled-in by Multicall
        contract: await getOrCreateContractEntity(ctx),
      });
      tokens.set(token.id, token);
    }
    token.owner = toOwner;

    if (toOwner && fromOwner) {
      const transfer = new Transfer({
        id,
        block,
        timestamp,
        transactionHash,
        from: fromOwner,
        to: toOwner,
        token,
      });
      transfers.add(transfer);
    }
  }

  // Query tokenURI for all the tokens in the batch, at the highest block of the batch
  const maxHeight = maxBy(baycDataArr, (data) => data.block)!.block;
  const multicall = new Multicall(ctx, { height: maxHeight }, multicallAddress);
  ctx.log.info(`Calling multicall for ${baycDataArr.length} tokens...`);
  const results = await multicall.tryAggregate(
    functions.tokenURI,
    baycDataArr.map(
      (data) => [contractAddress, [BigNumber.from(data.tokenId)]] as [string, BigNumber[]]
    ),
    100
  );

  const tokensWithNoImage: string[] = [];
  results.forEach((res, i) => {
    const t = tokens.get(baycDataArr[i].tokenId.toString());
    if (t) {
      let uri = '';
      if (res.success) {
        uri = <string>res.value;
      } else if (res.returnData) {
        uri = <string>functions.tokenURI.tryDecodeResult(res.returnData) || '';
      }
      t.uri = uri;
      if (!tokenIdToImageUrl.has(t.id)) tokensWithNoImage.push(t.id);
    }
  });
  ctx.log.info(`Done`);

  // Fetch the off-chain metadata in parallel and keep the image URL
  await Promise.all(
    tokensWithNoImage.map(async (id) => {
      const t = tokens.get(id);
      if (t && t.uri) {
        try {
          const res = await api.get(t.uri);
          tokenIdToImageUrl.set(id, res.data.image);
          t.imageUrl = res.data.image;
        } catch (error) {
          ctx.log.warn(`[API] Error while fetching metadata for token ${id}`);
          if (error instanceof Error) ctx.log.warn(error.message);
        }
      }
    })
  );

  await ctx.store.save([...owners.values()]);
  await ctx.store.save([...tokens.values()]);
  await ctx.store.save([...transfers]);
}
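The owners map in saveBAYCData is keyed by lowercase address, while the addresses decoded from the Transfer event are typically checksummed (mixed case). Every lookup therefore has to use the same normalization. If it does not, the same owner can be instantiated twice in one batch. Below is a minimal sketch of a get-or-create helper that centralizes this normalization. `OwnerLike` and `getOrCreateOwner` are hypothetical names used only for illustration and are not part of the squid:

```typescript
// Hypothetical stand-in for the generated Owner model: only the id matters here.
class OwnerLike {
  id: string;
  constructor(props: { id: string }) {
    this.id = props.id;
  }
}

// Return the cached owner for `address`, creating it if needed.
// Keys are always lowercased, so "0xAbC..." and "0xabc..." map to one entity.
function getOrCreateOwner(owners: Map<string, OwnerLike>, address: string): OwnerLike {
  const key = address.toLowerCase();
  let owner = owners.get(key);
  if (owner === undefined) {
    owner = new OwnerLike({ id: key });
    owners.set(key, owner);
  }
  return owner;
}

// Usage: a checksummed and a lowercase spelling of the same address.
const owners = new Map<string, OwnerLike>();
const a = getOrCreateOwner(owners, "0xBC4CA0EdA7647A8aB7C2061c2E118A18a936f13D");
const b = getOrCreateOwner(owners, "0xbc4ca0eda7647a8ab7c2061c2e118a18a936f13d");
console.log(a === b, owners.size); // true 1
```

Storing the normalized key in one place also means the ID written to the database and the key used in memory can never drift apart.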

We are ready to test our code. Since we changed the schema, we need to delete the old migration, start a fresh database container, and generate a new migration:

make down

make up

make migration

Always remember to build, and then we can start the processor.

make build

make process

While the processor runs the first Multicall, which takes a while because it covers a large batch of tokens, we can start the GraphQL server as well.

make serve

As soon as the first Multicall is done, we can head over to the GraphQL playground in the browser and test what we have built so far.

Let’s query the first 10 tokens that already have an imageUrl, and pick one at random.

query MyQuery {
  tokens(limit: 10, where: {imageUrl_isNull: false}) {
    id
    imageUrl
    uri
  }
}

Let’s copy the value of the imageUrl field and paste it into another browser tab: that’s our Bored Ape.

In conclusion, we have shown that we can fully index the Bored Ape Yacht Club NFT collection, fetch each token’s metadata URI from the contract, and call those endpoints to save the URLs of the images locally.

So, in this video, we learned that the Subsquid SDK gives you the freedom to use external libraries and perform API calls to augment your on-chain data.
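The image step in saveBAYCData is an instance of a general pattern. First, check an in-memory cache. Then fetch only the misses, in parallel. Finally, let individual failures degrade gracefully instead of aborting the whole batch. Below is a minimal sketch with the HTTP client abstracted behind a hypothetical `fetchImage` function. In the squid, this role is played by `api.get(t.uri)`. `TokenLike`, `FetchImage` and `fillImageUrls` are also illustrative names:

```typescript
// Hypothetical shape of a token, as far as this step is concerned.
interface TokenLike {
  id: string;
  uri: string;
  imageUrl?: string;
}

// Resolves a metadata URI to an image URL; rejects on network or parse errors.
type FetchImage = (uri: string) => Promise<string>;

// Fill `imageUrl` for every token, hitting the network only for cache misses.
// Returns the ids whose fetch failed, so the caller can log or retry them later.
async function fillImageUrls(
  tokens: TokenLike[],
  cache: Map<string, string>,
  fetchImage: FetchImage
): Promise<string[]> {
  const misses = tokens.filter((t) => t.uri !== "" && !cache.has(t.id));

  // Each promise settles to a result object, so one failing endpoint
  // does not reject the whole Promise.all.
  const outcomes = await Promise.all(
    misses.map((t) =>
      fetchImage(t.uri).then(
        (url) => ({ id: t.id, url }),
        () => ({ id: t.id, url: undefined as string | undefined })
      )
    )
  );

  const failed: string[] = [];
  for (const { id, url } of outcomes) {
    if (url === undefined) failed.push(id);
    else cache.set(id, url);
  }

  // Copy whatever the cache now knows onto the tokens (old hits and fresh fetches alike).
  for (const t of tokens) {
    const url = cache.get(t.id);
    if (url !== undefined) t.imageUrl = url;
  }
  return failed;
}
```

Returning the failed IDs, rather than only logging them, leaves the retry policy to the caller.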

Extensions via custom resolvers

In this lesson, we are covering some additional, advanced features that deserve a separate explanation.

In this specific section, we are going to discuss extending the GraphQL server with custom resolvers.

This feature lets you develop arbitrary custom queries that go far beyond those automatically generated from the schema you defined.

At this point, I want to mention that this, as well as many of the other topics explained throughout this series, has a dedicated page in our documentation at docs.subsquid.io.

So far, we have collected transfer information for the Bored Ape Yacht Club NFTs, as well as ownership and metadata, and we have just finished collecting the token images from off-chain endpoints.

Let’s now imagine we want to do some basic analysis and count the number of transfers of these tokens for each day.

We could do this in the processor while indexing, but that requires jumping through some hoops.

Instead, since all the necessary data is already saved in the database, we can create a server extension with a custom query that the GraphQL server will resolve for us.

The first thing we need to do is to open the schema and change the type of the timestamp field of the Transfer entity from BigInt to DateTime. We also want to add an index on it (with the @index directive), to speed up the query we are going to write.

Next, because we changed the schema, we need to regenerate the TypeScript models. Let’s open a terminal and run:

make codegen

Then, in our processor, we need to save the data in the new format. Let’s start by changing the type of the timestamp field in the BAYCData type to Date.

Then, in handleTransfer, let’s change how we set timestamp on the data object while indexing, by creating a new Date instance from the block timestamp.
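Concretely, the timestamp field of BAYCData becomes a Date, and handleTransfer builds it from the block header. Below is a minimal sketch. It assumes `block.timestamp` is a number of milliseconds since the Unix epoch; if your processor version reports seconds, multiply by 1000 first. `BlockLike` and `toTransferTimestamp` are hypothetical names used only for illustration:

```typescript
// Hypothetical stand-in for the block header: only the field used here.
interface BlockLike {
  timestamp: number; // assumed: milliseconds since the Unix epoch
}

// Updated data type: timestamp is now a Date (it was a bigint before the schema change).
type BAYCData = {
  id: string;
  from: string;
  to: string;
  tokenId: bigint;
  timestamp: Date;
  block: number;
  transactionHash: string;
};

// Replaces `timestamp: BigInt(block.timestamp)` in handleTransfer.
// If your processor reports seconds instead, pass block.timestamp * 1000.
function toTransferTimestamp(block: BlockLike): Date {
  return new Date(block.timestamp);
}

// Made-up header for illustration: 1669852800000 ms is 2022-12-01T00:00:00Z.
const header: BlockLike = { timestamp: 1669852800000 };
console.log(toTransferTimestamp(header).toISOString()); // 2022-12-01T00:00:00.000Z
```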

Now we can finally implement the extension. Under src, let’s create a folder named server-extension, and inside it a subfolder named resolvers. In this subfolder, we create a file named index.ts.

Next, we need to install the type-graphql library, which provides the decorators and types we are going to use in our server extension. Let’s open the terminal and run:

npm i type-graphql

In our index.ts file, we start by adding some imports at the top; we’ll be using them later. If you have a smart IDE, it might auto-suggest these as you type.

import 'reflect-metadata'
import type { EntityManager } from 'typeorm'
import { Field, ObjectType, Query, Resolver } from 'type-graphql'
import { Transfer } from '../../model'

Our first class is decorated with the ObjectType decorator, and it defines the type returned by the query. It has two fields, day and count, each decorated with Field. Let’s also implement a constructor for the class.

@ObjectType()
export class TransfersDayData {
  @Field(() => Date, { nullable: false })
  day!: Date

  @Field(() => Number, { nullable: false })
  count!: number

  constructor(props: Partial<TransfersDayData>) {
    Object.assign(this, props)
  }
}

The next class is decorated with the Resolver decorator, because it is in charge of performing the actual query and returning objects of the type we just defined.

Its constructor receives a function that returns a Promise resolving to the TypeORM EntityManager. This is how the resolver gets direct access to the database.

We then implement a method decorated with the Query decorator, which returns an array of the TransfersDayData objects we defined above.

This method runs whenever the data is requested, and it shows up in the GraphQL playground as a query named after the method, getTransfersDayData.

It first awaits this.tx() to obtain the EntityManager, then gets the repository for the table we are interested in, the one backing the Transfer entity.

Next, we have our data: an array of objects with day and count fields, because that is what we want to obtain, the number of transfers for each day.

This is where we perform our query: we convert each transfer’s timestamp to a date, count the rows of the transfer table for each date, and group and order the results by day, most recent first.

It’s a relatively simple SQL query, but if you are not familiar with it, there is plenty of learning material available on the internet.

To return the correct type, we convert each row into a TransfersDayData instance with this arrow function.

@Resolver()
export class TransfersDayDataResolver {
  // The server provides a function returning a Promise of the TypeORM EntityManager
  constructor(private tx: () => Promise<EntityManager>) {}

  @Query(() => [TransfersDayData])
  async getTransfersDayData(): Promise<TransfersDayData[]> {
    const manager = await this.tx()
    const repository = manager.getRepository(Transfer)
    // Raw rows: Postgres returns COUNT(*) as bigint, which the driver hands back as a string
    const data: {
      day: string
      count: string
    }[] = await repository.query(`
      SELECT DATE(timestamp) AS day, COUNT(*) AS count
      FROM transfer
      GROUP BY day
      ORDER BY day DESC
    `)
    return data.map(
      (i) => new TransfersDayData({
        day: new Date(i.day),
        count: Number(i.count)
      })
    )
  }
}
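If SQL is not your strongest language, the aggregation that the resolver delegates to Postgres can be mirrored in plain TypeScript to build intuition. This is only a sketch, not a replacement for the query. It assumes the transfer timestamps are already in memory, and it buckets by UTC calendar day, whereas DATE(timestamp) on a time-zone-aware column depends on the database session’s time zone. `DayCount` and `countTransfersPerDay` are hypothetical names:

```typescript
interface DayCount {
  day: string; // "YYYY-MM-DD" (UTC)
  count: number;
}

// Same idea as:
//   SELECT DATE(timestamp) AS day, COUNT(*) AS count
//   FROM transfer GROUP BY day ORDER BY day DESC
function countTransfersPerDay(timestamps: Date[]): DayCount[] {
  const counts = new Map<string, number>();
  for (const ts of timestamps) {
    // toISOString() is always UTC, so its first 10 characters are the UTC date (GROUP BY day).
    const day = ts.toISOString().slice(0, 10);
    counts.set(day, (counts.get(day) ?? 0) + 1); // COUNT(*)
  }
  // "YYYY-MM-DD" strings sort chronologically, so comparing strings gives ORDER BY day DESC.
  return Array.from(counts, ([day, count]) => ({ day, count })).sort((a, b) =>
    a.day < b.day ? 1 : a.day > b.day ? -1 : 0
  );
}

const sample = [
  new Date("2022-12-01T08:00:00Z"),
  new Date("2022-12-01T23:59:59Z"),
  new Date("2022-12-02T00:00:01Z"),
];
console.log(countTransfersPerDay(sample));
```

Doing this in the database, as the resolver does, avoids loading every transfer into memory and lets Postgres use the index on timestamp.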

Now that we are done coding, and since the schema changed again, we need to delete the old migration, launch a fresh database container, and create a new migration file:

make down

make up

make migration

Then we need to build our code:

make build

And launch the processor:

make process

It’s starting to process the tokens. As you know, it requests the token URIs from the Multicall contract and fetches each token’s image from those endpoints, so it might take a moment. In the meantime, we can launch the GraphQL server.

make serve

Let’s head over to the browser, to see this query in action. We need to visit the usual address:

And here we have our new query, getTransfersDayData. We can select the day and count fields, and when we execute it, we get a list of days, each with its number of transfers. All of this information was retrieved via a custom SQL query.

With custom resolvers, the sky’s the limit, basically.

I hope you found this useful, and I am looking forward to seeing what creative uses of this feature you come up with.